Imagine someone wants to defraud your company and has access to your emails (or your supplier’s emails). Now imagine how much damage they could do if artificial intelligence (AI) allowed them to automatically identify sensitive financial documents and swap in fraudulent details.

Unfortunately for finance teams, these AI capabilities already exist. Security researchers have uncovered an AI tool that can quickly analyze compromised emails, identify those containing invoices or payment details, and alter the banking information. The tampered invoice is then reinserted into the original message or sent to a preset contact list.

The end result? Finance teams are about to be inundated with far more fraud attempts, using more sophisticated tactics and phony documents that are harder to detect.

To be clear, the core methods described here aren’t new. They’ve been around for a while in business email compromise (BEC) attacks and wire fraud. What’s new is the mind-boggling speed and efficiency made possible through AI. Let's look at why this is a game-changing threat for finance professionals.

An effortless way to alter bank details at scale

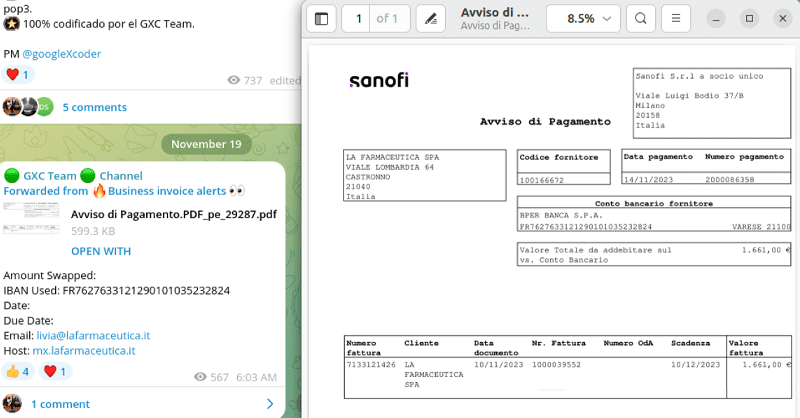

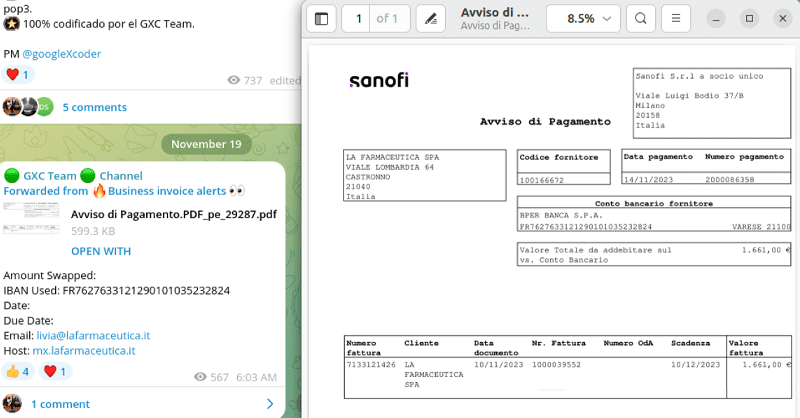

This “invoice swapper” tool has been used to create fraudulent emails across Europe, including in the UK, Spain, France, and Germany. Working over the POP3 and IMAP4 email protocols, it scans compromised email accounts for messages containing invoices or payment details. Once it identifies those messages, it alters the banking information to reroute payments to accounts controlled by the fraudsters.

The platform also offers options for configuring SMTP settings, making it easier to send a raft of fraudulent emails all at once. It can also send reports, including updates about the generated invoices, to a Telegram channel, a less conventional alternative to traditional command-and-control communication methods.

To operate, the tool requires a list of compromised email accounts and their credentials, along with the banking codes (IBAN and BIC) to substitute into the spoofed invoices. It then scans each account for invoices to modify, systematically replacing the payee’s banking information with the fraudulent details.

Multilingual features: bigger cyber threats in any language

Worryingly, the tool features multi-language support, allowing it to process invoices in various languages automatically and without human intervention. It replaces the banking details on these invoices with those specified by the perpetrator, then either reinserts the altered invoice into the original email or distributes it to a preselected list of contacts.

This tool represents a significant advancement in the use of AI for criminal purposes, particularly wire fraud and bogus invoice scams. Its automated, multilingual capabilities pose a considerable threat to businesses, especially given the tendency for staff to take familiar-looking invoices at face value.

It’s a numbers game – and you’re at a disadvantage

For finance teams, this type of tool turns fraud and cybercrime attempts into a matter of ‘when,’ not ‘if.’ It enables a volume of fraud attempts that's simply impossible to counter with traditional approaches.

It's not just an increase in the volume of attempts, though. Because the tool works from compromised email credentials, it also enables a targeted approach. By focusing on specific accounts, often belonging to key financial personnel, fraudsters can significantly boost their success rate. Combined with the tool's other advanced features, this targeting puts finance and accounts payable (AP) professionals at a much higher risk of falling victim.

Plus, the use of SMTP settings for sending out fabricated invoices, as well as the Telegram channel feature for command-and-control communication, signifies a shift towards more advanced, less traceable methods of operation.

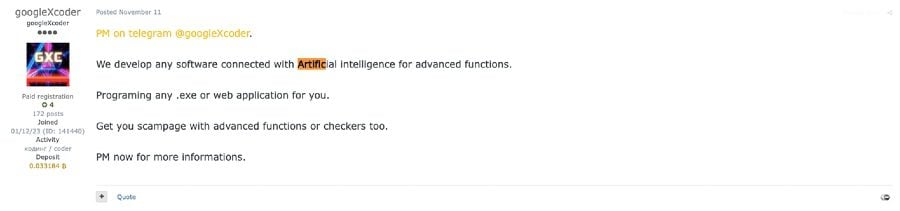

Unfortunately, this is just one of the new AI tools in a cybercriminal’s arsenal. More are likely on the horizon, but several platforms are already explicitly designed and marketed to assist criminal activity. And even tightly moderated large language models (LLMs) like ChatGPT can be manipulated for nefarious purposes.

FraudGPT and WormGPT: These are marketed as "starter kits for cyber attackers" and offer a suite of resources for a subscription fee, making advanced tools more accessible to aspiring cybercriminals. FraudGPT is touted as being capable of fabricating "undetectable malware" and identifying websites susceptible to credit card fraud. Meanwhile, WormGPT is noted for its unlimited character support, chat memory retention, and exceptional grammar and code-formatting capabilities. Both can be used to write malicious code and create convincing phishing emails.

Generative AI in phishing campaigns: Even mainstream, moderated AI tools can help fraudsters automate the creation of hyper-personalized phishing emails in multiple languages, increasing the likelihood of successful attacks. They can also speed up open-source intelligence gathering and automate malware generation, lowering the bar for people without much technical expertise.

AI-driven malware creation: The FBI has warned that hackers are using even mainstream generative AI tools like ChatGPT to quickly create malicious code, enabling cybercrime sprees that would have taken far more effort in the past. The FBI also flagged the use of AI voice generators to impersonate trusted individuals for fraud.

Use of ChatGPT lures on social media: Hackers are increasingly using ChatGPT-themed lures to spread malware across platforms like Facebook, Instagram, and WhatsApp. Malware like DuckTail and NodeStealer have been distributed using these AI-themed lures, with the goal of compromising business accounts and stealing sensitive information.

The invoice swapper tool by itself makes your organization vulnerable through the sheer scale and volume of fraud attempts – but even that is just a drop in the bucket compared to the potential for other AI tools to radically increase the number of attacks against your organization.

How can you protect your business?

The uncomfortable reality is that generative AI is advancing rapidly, creating bigger and more uncertain threats as the technology is used to refine itself and spawn new applications. Finance leaders will need a multi-faceted, layered approach to counter cybercriminals’ growing advantages, one that includes regular employee training.

But one of the most critical layers involves leveraging technology yourself. Fight fire with fire and look for automated ways to strengthen your controls and authentication measures, creating guardrails around the employees who are most likely to be targeted.
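As one illustration of an automated guardrail against bank-detail swapping, here is a minimal Python sketch. It assumes a hypothetical vendor master file that maps each vendor ID to the IBAN and BIC your team has already verified; `BankDetails`, `review_invoice`, and the sample vendor ID are illustrative names, not part of any specific product. The IBAN check is the standard ISO 13616 mod-97 checksum, which catches typos but not fraud, since an attacker’s IBAN is perfectly valid. The real protection comes from comparing every invoice against the pre-verified record.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BankDetails:
    """Payee banking codes as they appear on an invoice or in the vendor master file."""
    iban: str
    bic: str


def normalize_iban(iban: str) -> str:
    """Remove spaces and upper-case so formatting differences don't cause false alarms."""
    return iban.replace(" ", "").upper()


def iban_checksum_ok(iban: str) -> bool:
    """ISO 13616 mod-97 check. Passing only means the IBAN is well-formed,
    NOT that the account belongs to the vendor: a fraudster's IBAN passes too."""
    s = normalize_iban(iban)
    if not 15 <= len(s) <= 34 or not (s.isascii() and s.isalnum()):
        return False
    rearranged = s[4:] + s[:4]  # move country code and check digits to the end
    digits = "".join(str(int(ch, 36)) for ch in rearranged)  # letters: A=10 ... Z=35
    return int(digits) % 97 == 1


def review_invoice(vendor_id: str, invoice_bank: BankDetails,
                   vendor_master: dict[str, BankDetails]) -> list[str]:
    """Return reasons to hold a payment; an empty list means no red flags were found.

    vendor_master is an ASSUMED, pre-verified record of each supplier's bank details.
    """
    flags: list[str] = []
    if not iban_checksum_ok(invoice_bank.iban):
        flags.append("IBAN fails checksum")
    known = vendor_master.get(vendor_id)
    if known is None:
        flags.append("vendor not in master file")
        return flags
    if normalize_iban(invoice_bank.iban) != normalize_iban(known.iban):
        flags.append("IBAN differs from vendor master")
    if invoice_bank.bic.strip().upper() != known.bic.strip().upper():
        flags.append("BIC differs from vendor master")
    return flags


# Hypothetical vendor record; in practice this would come from your AP or ERP system.
vendor_master = {"V-1001": BankDetails("GB82 WEST 1234 5698 7654 32", "WESTGB2L")}
swapped = BankDetails("DE89 3704 0044 0532 0130 00", "COBADEFFXXX")
print(review_invoice("V-1001", swapped, vendor_master))
# ['IBAN differs from vendor master', 'BIC differs from vendor master']
```

In this sketch, a swapped invoice passes the checksum but is still flagged because its details don’t match the master record. Any flag would then route the payment to out-of-band verification, such as calling the supplier on a phone number already on file rather than one supplied in the email.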