Only 1 in 10 finance leaders are ‘very confident’ they could stop an AI-powered cyberattack

Only 13% of finance leaders feel fully prepared for AI-powered cyberattacks. Discover where the real risks lie—and how to close the defense gap fast.

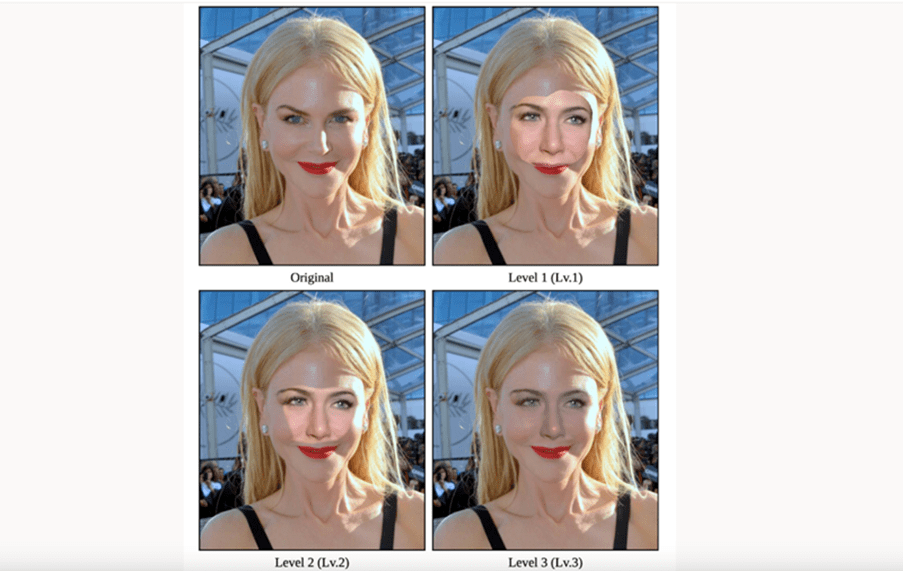

Deepfakes are a type of synthetic media, usually a video or image that convincingly depicts someone doing something they did not do. These artificial videos and images are usually created through generative artificial intelligence (AI). The term “deepfake” combines “deep learning” and “fake”. Deepfakes often lean on existing content, making hard-to-detect changes to the original files to weave a convincing yet misleading story.

Deepfakes use deep learning, a branch of machine learning and AI, to mimic a person. Although people have been editing photos and videos for many years, deepfake technology can alter existing videos and images in ways that far surpass simple image editing. For instance, (fairly realistic) deepfake likenesses of celebrities such as Tom Cruise and Keanu Reeves have garnered millions of views on TikTok despite being artificial.

“The technology behind deepfakes is known as a generative adversarial network, or GAN. GANs consist of two neural networks, a generator, and a discriminator, which compete against each other to produce increasingly realistic outputs.”*

To simplify, the technology analyses a collection of images and videos of a person, then uses machine learning to replicate their movements and expressions in new video. Once the visuals are in place, a similar process is usually applied to voice recordings so that seemingly accurate audio can be overlaid. Some content is so realistic that digital forensic experts struggle to differentiate what’s real from what’s AI-generated.
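For readers who want the maths, the contest between generator and discriminator described above is commonly written as a single minimax objective. This is the standard textbook formulation of a GAN rather than the method of any particular deepfake tool, and the symbols are conventional notation introduced here for illustration: $x$ is a real sample, $z$ is random noise, $G(z)$ is the fake produced by the generator, and $D(\cdot)$ is the discriminator's estimate of the probability that its input is real.

```latex
\min_{G} \max_{D} \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}(z)} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The discriminator tries to make this value as large as possible by scoring real samples near 1 and fakes near 0, while the generator tries to make it as small as possible by producing fakes the discriminator scores as real. Repeating that tug-of-war is what pushes the output towards ever more realistic results.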

So how hard is it to create deepfake videos? Unfortunately, it’s become increasingly easy. DeepFaceLab and Deepfakes Web are just two examples among hundreds of apps and software tools readily available to create compelling deepfakes.

Though they’ve technically been around since 2017, deepfakes have been showing up more and more in media headlines. You may have heard how a finance worker in Hong Kong recently lost $39 million to imposters using deepfake technology, or about the latest viral scandal, in which deepfake pornographic images of Taylor Swift took the internet by storm after being shared on X (formerly Twitter) and Telegram. The latter caused a more widespread shake-up about the use of deepfakes, particularly given that pornographic images reportedly make up 98% of artificially generated images and 99% of the victims are women.

Other well-known deepfake examples include the Morgan Freeman video created by Dutch deepfake YouTube channel Diep Nep. This video, which still garners plenty of discussion and attention, was an eye-opening demonstration of how convincing deepfake technology can be when impersonating well-known celebrities and media personalities.

Speaking of impersonation, another unfortunate real-life example is the deepfake video of journalist Martin Lewis promoting what was claimed to be Elon Musk’s new trading platform, Quantum AI. The video was a scam that very quickly went viral, resulting in people losing thousands of dollars to a fake investment opportunity. One Australian man lost $80,000 of cryptocurrency after viewing the viral video promoting the platform.

WARNING. THIS IS A SCAM BY CRIMINALS TRYING TO STEAL MONEY. PLS SHARE.

This is frightening, it’s the first deep fake video scam I’ve seen with me in it. Govt & regulators must step up to stop big tech publishing such dangerous fakes. People’ll lose money and it’ll ruin lives. https://t.co/ZzaBELg1kg

— Martin Lewis (@MartinSLewis) July 6, 2023

Although much of what we see in the media focuses on the dark side of deepfake technology, it’s not all doom and gloom. Some positive use cases for deepfake technology include education, where universities and schools are looking for ways to use the technology to create a more engaging and immersive learning experience.

Deepfake technology has also become a major topic of discussion when it comes to augmenting cinema, storytelling and video games. To create an even more immersive experience, deepfake technology could see gamers’ avatars mimicking their facial movements and providing a voiceover to match the character they’re playing – for example, Luke Skywalker.

Not only is this expected to elevate the gaming experience, but another silver lining is that it may reduce harassment of transgender gamers by allowing them to match their voices to their gender identity. Surprisingly, last year, almost half of gamers experienced harassment via voice chat while playing.

The falsified explicit images of Taylor Swift referenced earlier in this article have caused a stir about the need to regulate and criminalize the creation of deepfake images. Although we’ve already pointed out that deepfakes are not always used with malicious intent, there’s plenty to be said for the harm inflicted on victims when deepfake technology is used to misinform, scam or misrepresent others.

Regulators are looking closely at ways to implement legislation around the use of deepfakes. In the US, there are currently no federal laws to prohibit the creation or sharing of these images or videos. However, as of January 2024, a No Artificial Intelligence Fake Replicas And Unauthorized Duplications (No AI FRAUD) Act had been proposed. This act takes a stance against using a digital replica of a person, in both appearance and voice, without their permission.

At the state level, however, some states have implemented legislation against the use of deepfakes, including California and Texas, among others.

In Australia, there are no laws specifically prohibiting the use of deepfakes, but the country does have strict penalties against the non-consensual use and distribution of pornographic imagery. These can also extend to imagery created with deepfake technology.

As mentioned above, deepfakes are becoming increasingly popular and accessible, especially in the corporate space. According to an .

The biggest targets for these crimes? C-level executives and, notably, finance professionals. Deepfakes are contributing to the growing cost of cyber scams, with criminals using deepfake technology to humiliate or harass business leaders and leaning on practices such as doxxing to cause personal and professional harm.*

It’s important to question any suspicious activity and perform due diligence when it comes to information and security – especially if there’s any financial risk at play. If you’re concerned about something you’ve seen online or an interaction you had over video call or the phone, report it to your local authorities and, depending on your risk level, seek legal advice.

You can also report videos or images online to have platforms review and potentially remove them if they seem to be misleading or compromising.

There are several ways individuals and businesses can protect themselves, including:



Eftsure provides continuous control monitoring to protect your eft payments. Our multi-factor verification approach protects your organisation from financial loss due to cybercrime, fraud and error.