Payment Security 101

Learn about payment fraud and how to prevent it

AI & financial risk: How finance leaders can prepare for a post-truth future. Our cybersecurity guide is packed with stats all finance professionals should know with a well-researched recap of how the AI landscape is accelerating cyber threats this year.

In last year’s edition, we asked this question: despite ballooning security budgets and closer scrutiny, why are so many business communities still seeing unprecedented losses to scams and cybercrime?

This year, the proliferation of generative artificial intelligence (AI) has created perhaps an even more urgent question: if businesses were already facing unprecedented cyber losses, how will they fare now that AI is equipping cybercriminals with powerful capabilities and rearranging notions of evidence and truth? This guide attempts to answer that question by examining generative AI and its impacts on the current threat landscape for AP and finance teams – as well as the novel, uncharted threats that are already looming on the horizon.

There’s a lot we don’t yet know about generative AI, but what we do know is that its pace of evolution is already outstripping laws, regulations and societal norms. Are finance leaders prepared for a post-trust future?

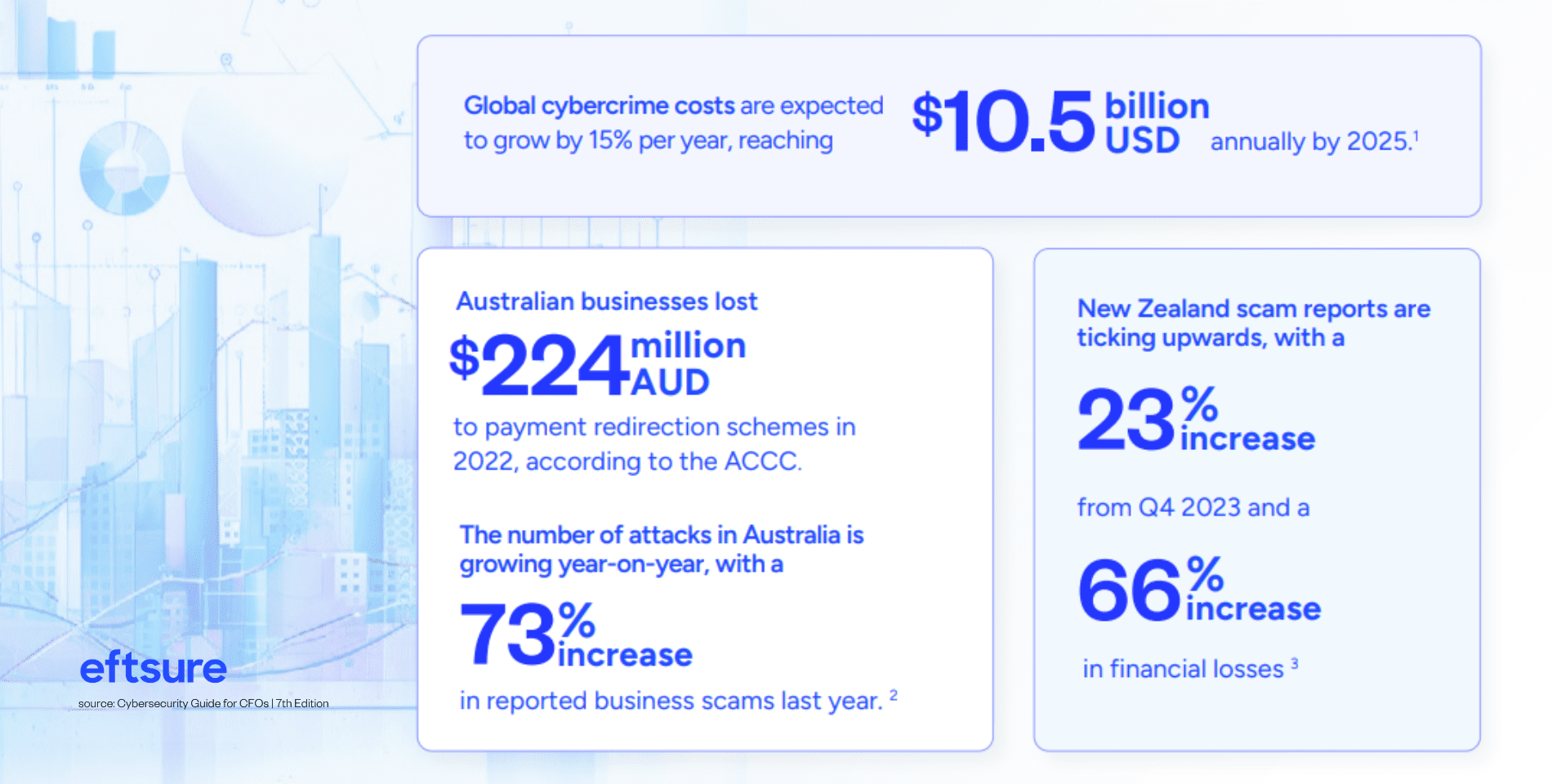

This data only tells part of the story, though – incidents are likely underreported and the lasting effects on a business are often underestimated. For example, the $224 million reported losses in Australia only include incidents reported to Scamwatch, ReportCyber and the AFCX. Eftsure survey data indicates that there is no primary reporting authority according to most financial leaders, with more than one in four saying they weren’t sure whether fraud incidents were reported at all.

According to 2023 data from the Australian Competition and Consumer Commission (ACCC), the largest losses for businesses were a result of payment redirection schemes, also called business email compromise (BEC) attacks.2 In this type of attack, scammers infiltrate and weaponise email accounts to manipulate an accounts payable (AP) officer into making a fraudulent payment.

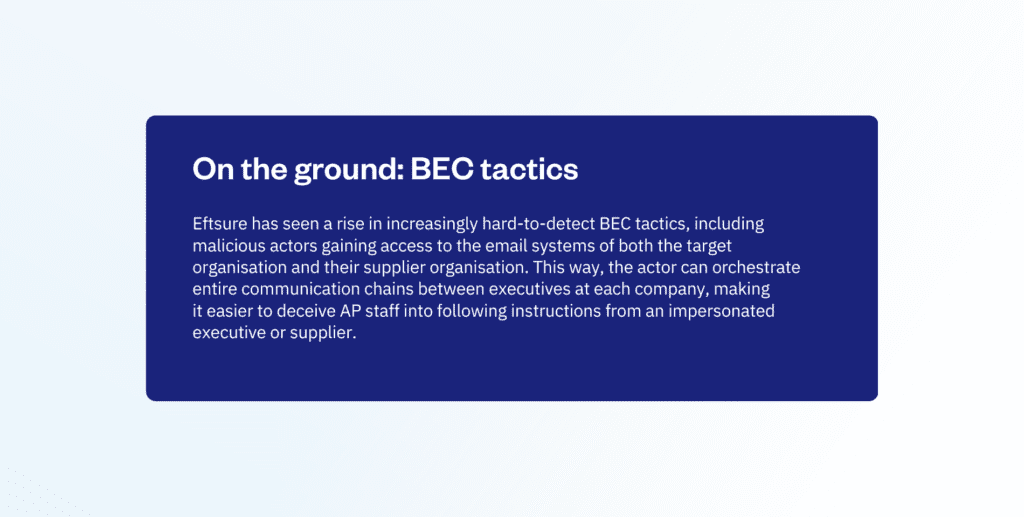

For instance, a threat actor might gain access to the email systems of one of your suppliers. From there, they can persuade your organisation’s employees to change payment details or make a fraudulent payment into the threat actor’s account. Even if your own cybersecurity defences are strong, the attack leverages weaknesses elsewhere in the supply chain and capitalises on human error.

This vulnerability is significant because banks don’t reconcile the names of the recipients to the account and bank state branch (BSB) number.

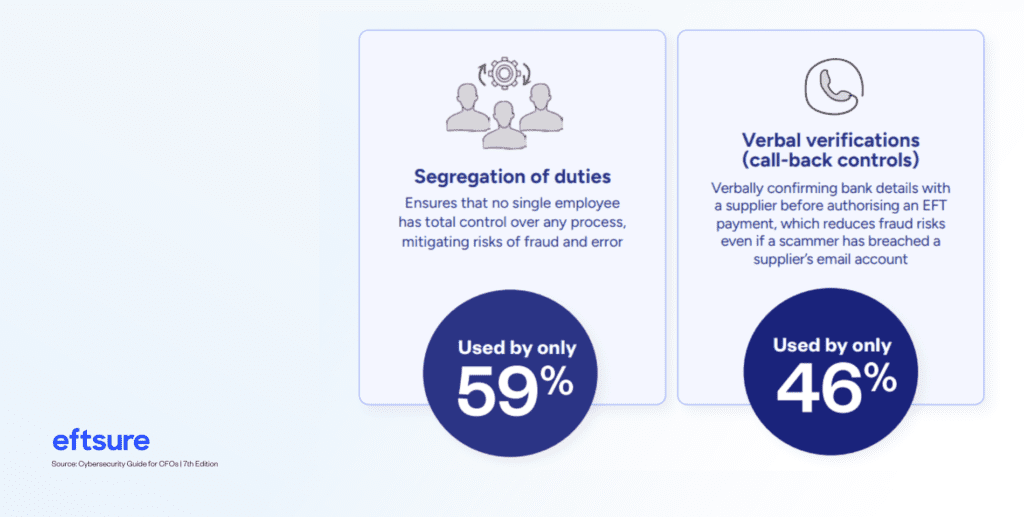

Despite the prevalence and growing sophistication of BECs, Eftsure data reveals that many organisations are foregoing the controls that tend to be more effective in thwarting BEC attacks.

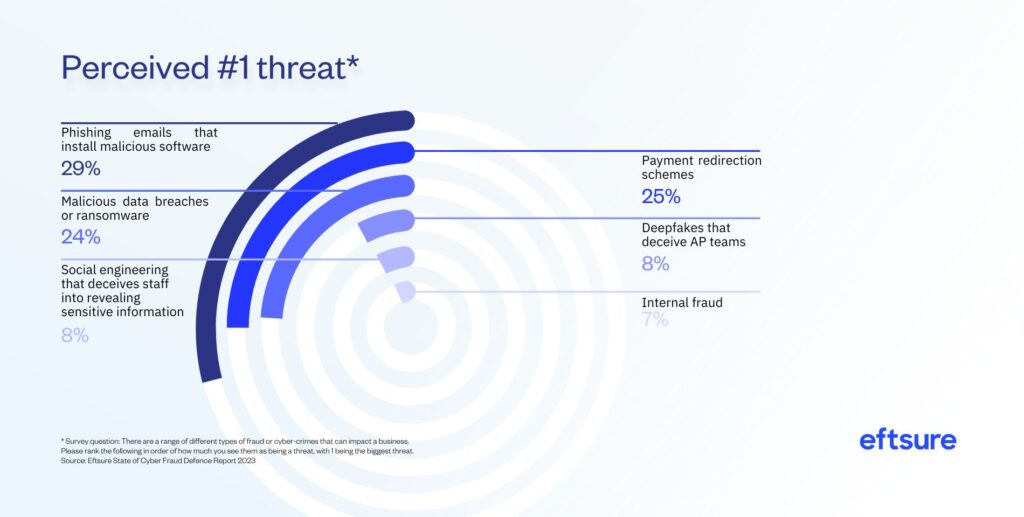

Eftsure’s data shows that finance leaders are aware of broader cyber threats, even though specific anti-fraud controls aren’t always tailored to cyber threats like BECs.

Notably, respondents’ broader concerns about global cybercrime tend to soften once they’re asked about fraud risks within their organisations, and many do not rank deepfake audio or video (AI-generated synthetic media that imitates real faces or voices) as major threats.

The bottom line: even without the rapid technological advances we saw in 2023, finance teams were vulnerable to common cyber attacks and digital fraud tactics. Where does that leave finance teams now that those technological advances are accelerating?

“From my point of view, if you don’t take AI seriously, if you don’t take cybersecurity seriously… then I don’t believe you’ll be here in the next five years.” – Bastien Treptel, co-founder of CTRL Group

In early 2023, OpenAI’s ChatGPT became the world’s fastest-growing consumer application in history, amassing 100 million users in only two months.6 Other generative AI companies attracted similar crowds of users and massive investments from some of the world’s biggest tech companies, while each model catalysed a flurry of iterations built for specialised use cases.

There are now multiple, fast-expanding ecosystems of generative AI tools. Some leaders have heralded the fast evolution and widespread accessibility as a transformative technological revolution, while others have warned of unpredictable, irrevocable changes to all of society and even the fate of humanity.

Let’s start somewhere smaller than the fate Machine learning is a subset of AI that of humanity – namely, what we mean when develops how computers learn and make we talk about AI. AI is a broad field that aims predictions or decisions based on data. to create systems capable of performing Instead of being explicitly programmed to tasks that typically require human intelligence. perform a specific task, these algorithms use These tasks include – but aren’t limited to – statistical techniques to learn patterns in data. understanding natural language, recognising patterns, solving problems and making decisions.

Generative AI is a subset of AI, often using machine learning techniques, that focuses on generating new content. Where it’s distinct from other forms of AI is that it generates content like text, images or sound – content that is entirely new and was not part of the original dataset used for training.

How does it work? Generative AI uses neural networks, which essentially teach machines to process data similarly to human brains. Two neural networks can form a generative adversarial network (GAN), in which one network generates new data and the other network assesses the quality of that data.

These two networks build off one another, continuously refining the generator network’s output based on feedback from the other network.

This content generation can span multiple modalities and techniques. Large language models (LLMs) are a subset of generative AI specifically focused on generating and understanding text. LLMs are trained on vast amounts of textual data, enabling them to predict and generate human language.

Examples include OpenAI’s GPT series (like GPT-3 or GPT-4) and BERT by Google. While all LLMs are generative AI when used to produce text, not all generative AI systems are LLMs, as many generate non-textual content.

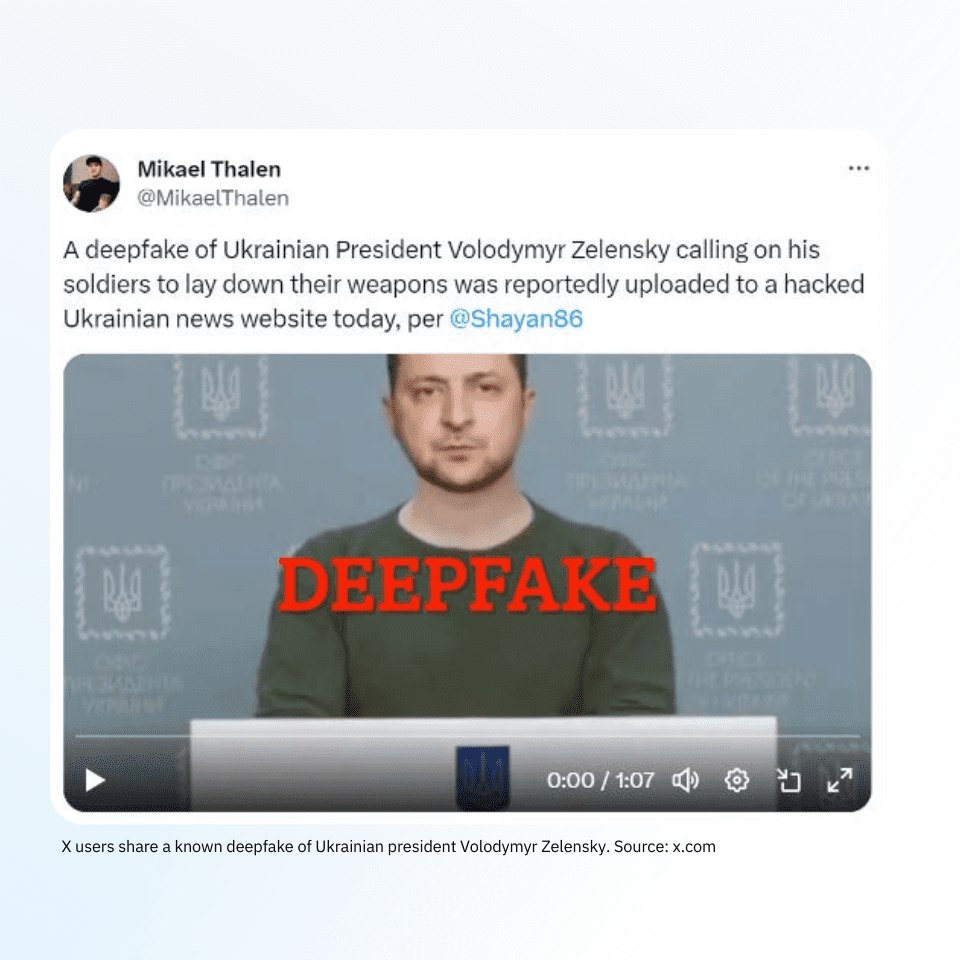

Deepfakes refer to AI-generated synthetic media (video, audio or images) in which simulated ones replace the appearance of real people.

While GANs are frequently used to create deepfakes, other neural network architectures can also be employed. Deepfakes involve training on a dataset of target videos to replace one person’s likeness with another’s, often leading to highly convincing, fabricated media.

Whether it’s deepfake audio calls or polished-sounding phishing emails, broader access to generative AI tools has opened a new world of scalable attacks and synthetic media. In other words, scammers have a broader array of tools than ever.

But, to start building resilient and flexible defences now, finance leaders need to understand specific applications. The following section will examine how cybercriminals already use AI to circumvent financial controls – and what’s next.

“This is the year that all content-based verification breaks. And our institutions haven’t had time to catch up with this change.” – Aza Taskin, co-founder of the Centre for Humane Technology

There’s a wide range of positive, promising applications of generative AI: faster, more accurate diagnostics in healthcare, advanced manufacturing, more efficient and sustainable agriculture, safer and smarter digital cities, to name a few.

Even in the cybersecurity arena, specialists are already leveraging AI tools to protect organisations’ systems and data. In October 2023, Commonwealth Bank’s General Manager of Cyber Security, Andrew Pade, explained that security teams have been using generative AI to identify threats in real time and create deceptive technologies that act as honeypots for threat actors.*

However, AI is assisting both defenders and attackers. After all, cybercriminals have access to the same AI tools and technologies – and then some. Unlike governments and businesses that are bound by regulations, privacy rules or security restrictions, threat actors are unfettered in their use of both mainstream and black-market AI tools.

It’s critical to understand how these capabilities can be used against finance professionals.

LLMs like ChatGPT are trained on vast amounts of text data. When prompted with user input, they generate coherent and contextually relevant text by predicting the next word in a sequence, leveraging their extensive training to serve information and conversational responses. They can act as on-demand virtual assistants, analyse spreadsheets or generate dentist bios in the voice of Christopher Walken.

They’re also helping threat actors perfect their cyber attacks. Grammatical mistakes are a common warning sign of a scam attempt, while impersonation attempts can be foiled by email wording that sounds unnatural or out-of-character. Now, mainstream AI tools like ChatGPT can help attackers sidestep these issues and create more polished, more effective phishing messages or BEC attacks.

For instance, Ryan Kalember – Vice President of Cybersecurity Strategy at email security company Proofpoint – has explained that LLMs are sparking major BEC upticks in markets that were previously off-limits due to language and cultural understanding barriers, such as Japan.

“BECs used to be a contentious topic in Japan because the attacker did not speak Japanese and didn’t understand business customs. Now the language and cultural understanding barriers [are] gone… and [attacks] are increasing in volume.” – Ryan Kalember, VP of Cybersecurity Strategy, Proofpoint*

Kalember also points out potential AI use cases for a multi-persona phishing tactic explored by Proofpoint research, in which threat actors masquerade as different parties within a single message thread.10 Leveraging the concept of social proof, this tactic uses benign, multi-persona conversations to win a target’s trust and eventually persuade them to click on malicious links, give away sensitive information or process fraudulent payments.

Eftsure has previously flagged similar tactics in foiled fraud attempts, with attackers orchestrating back-and-forth ‘conversations’ after compromising the email systems of both the target organisation and supplier organisation. While the basic structure of a BEC attack or phishing attempt remains the same, AI tools are making these more advanced tactics easier and more scalable.

“Some of the scarier tools are being birthed out of the dark web. You break into an email server, and then the AI goes and reads all the conversations and tells you how to best scam this organisation. I don’t think Australian businesses are quite yet ready for that” – Bastien Treptel, co-founder of CTRL Group*

Even heavily moderated AI tools like ChatGPT can give threat actors a crucial edge. Similar tools are spreading across black markets on the dark web, except these models are specifically designed to aid criminal activities and may be trained on datasets that include phishing messages and malware code.

WormGPT and FraudGPT are just a few examples of such tools. In July 2023, researchers from cybersecurity firm SlashNext uncovered the promotion of WormGPT as a “black hat alternative” to ChatGPT.12 Within a week, other researchers sounded the alarm about a similar tool, FraudGPT, circulating in Telegram channels.*

Later in 2023, Kaspersky researchers found counterfeit websites peddling fake access to WormGPT, illustrating the growing popularity of the tool.*

AI assistance isn’t just helping cybercriminals hone BECs or phishing messages, though. LLMs and other AI models can effectively act as super-assistants, crunching large datasets to find vulnerabilities, developing malicious code and brainstorming altogether new attack strategies. This is especially true for the blackmarket versions, which don’t have the same guardrails or regulations as ChatGPT or Bard.

Threat actors can access ever-widening pools of ill-gotten personal data on the dark web, but AI capabilities help spot patterns in these datasets and whittle down shortlists of the most vulnerable targets. Just as ChatGPT can help you devise new marketing strategies or ideas for a child’s birthday party, its malicious iterations can be used to brainstorm new approaches to infiltrate or de-fraud organisations and individuals.

This isn’t emerging technology or hypothetical risks – the threats are here, today. But there’s a potentially even more challenging threat on the horizon: synthetic media.

Massive losses result from deceptive tactics that take place entirely through text and email. The next frontier is deceptive tactics that use synthetic media, leveraging realistic faces and voices – or even the faces and voices of people you know.

Tracing synthetic media manipulation back to 2017 on an unsavoury adult Reddit forum, Queensland University of Technology researcher Lucas Whittaker and the co-authors of “Why managers should adopt a synthetic media incident response playbook in an age of falsity and synthetic” media explain how GANs can generate content to an “ultrarealistic standard.”*

That paper details a variety of business risks stemming from weaponised synthetic media, including reputational, economic, security and operational risks.

A CEO receives a call from someone posing as the boss of his parent company, in the leader’s signature German accent and tone, instructing the CEO to transfer an immediate payment to one of their suppliers.* A mother receives a call from a supposed kidnapper who has taken her daughter and is demanding a ransom payment, while her daughter’s voice pleads in the background.*

These aren’t science fiction plotlines. These are real-life scams, one of which took place in 2019, well before the proliferation of generative AI had lowered the barriers to accessing voice conversion technology.

Whittaker tells Eftsure that scams like the 2019 incident with the German CEO are “just the beginning of an increasing trend of voice conversion scams.

“We’re seeing increasingly widespread access to GAN-generated audio. Easily accessible voice cloning services generate pitch-perfect voice clones off of voice samples provided to the platform. [Multiple platforms] permit anyone to generate highly realistic speech audio from mere text input,” says Whittaker

“Lots of research focus is being placed on the development and optimisation of GAN models which specialise in vocal synthesis and conversion, so the synthetic voice output from openly available platforms will only become more realistic, expressive and seemingly human.

Scammers are already using AI-facilitated voice conversion scams to target individuals globally and also here in Australia.”

While there are fewer instances of scams that rely on synthetic video, it only took a few years for voice conversion scams to go from an outlier in the Wall Street Journal to a growing worldwide trend.

Finance leaders shouldn’t dismiss this risk when considering future threats and how to protect against them. Geographic dispersion is a reality for many organisations, their partners and their supply chains, making video calls a standard – and trusted – channel for verifying information. The ubiquity of recorded video calls also creates a large pool of data for threat actors to leverage if systems are breached.

The risks of synthetic media and LLMs are known. Threat actors are leveraging many of these capabilities right now, and it’s not hard to see how emerging capabilities like synthetic video can augment existing BEC or social engineering tactics.

The third area of risk within generative AI is what we do not currently know. While some dismiss new AI capabilities as overhyped buzzwords, others are warning that we simply don’t know where this technology might take us.

In the latter camp are Aza Raskin, co-founder of the Center for Humane Technology, and Tristan Harris, Executive Director. In their presentation “The AI Dilemma,” they argue for greater international regulation of generative AI, noting that current models can learn skills that even their creators didn’t anticipate.

“As we’ve talked to… AI researchers, what they tell us is that there is no way to know,” Raskin said. “We do not have the technology to know what else is in these models.”

Combined with the ultra-fast deployment of AI tools throughout the wider population, AI’s self-strengthening capabilities cause advances to occur on a “double exponential curve.” Harris and Raskin argue that the only way to ensure these advances don’t cause unforeseen catastrophes is to upgrade “our 19th-century laws, our 19th-century institutions for the 21st century.”

Even without the threat of synthetic media, many organisations are skipping vital antifraud controls and processes, potentially raising their risks of falling victim to cyber scams.

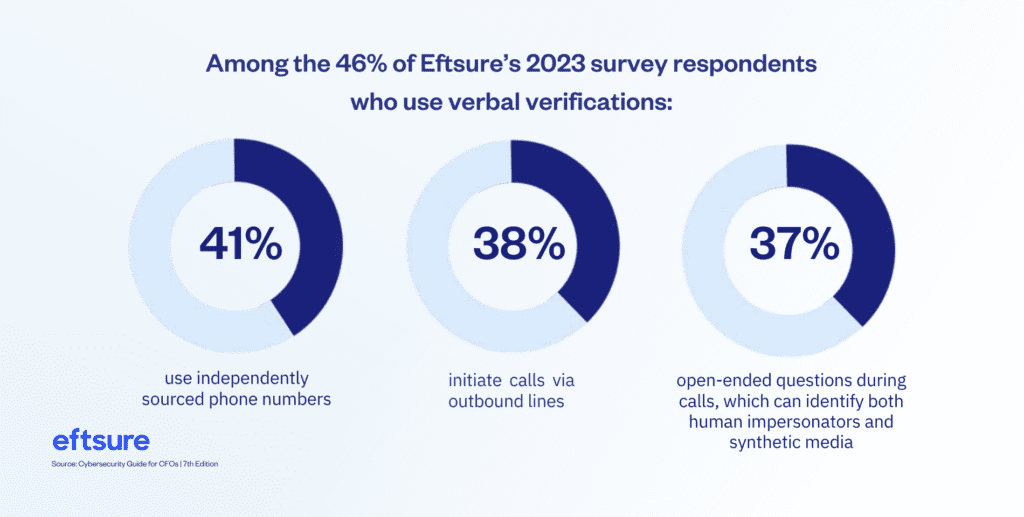

Eftsure found that verbal verifications, which could help weed out scam attempts that leverage voice conversion, are only used by less than half of organisations. Even among those who deploy this control, large portions are skipping best-practice approaches – which means existing vulnerabilities are likely to become more pronounced as voice conversion scams rise.*

You might look at existing deepfakes and question whether you or your employee would find them convincing. Some examples have an ‘uncanny valley’ effect or look otherwise unnatural. However, not only are outputs likely to become increasingly realistic, but experts say our brains are wired to believe synthetic media.

It’s already common for scammers to capitalise on employees’ busy schedules or cognitive load. For instance, malicious actors will target skeleton staff or employees who are about to go on leave. Other time-honoured tactics include urgency and pressure, such as impersonating a senior executive and telling an employee that a major deal is contingent on processing the payment within the next hour.

Whittaker says synthetic media plays on several similar cognitive biases and mental shortcuts, which could make it even harder to counter these tactics.

Limited awareness of deepfake capabilities.

“A lot of people aren’t aware of how realistic generative AI and deepfake generation can be,” says Whittaker, a claim supported by Eftsure’s survey data of finance professionals. “Those with limited awareness have no real cause to question synthetic content if it looks or sounds realistic enough. Deepfakes in particular can be deceptive given the richness of video content – we’re less inclined to systematically appraise sources of richer information like video content as it looks more credible due to the fact it appears more realistic.”

Familiarity heuristics.

“This is the tendency people have to trust people or objects they’re familiar with. Ifan employee is presented with a voice or face they recognise, like a coworker’s, their natural instinct is to trust the source of the information.”

Confirmation biases.

Whittaker also explains that we tend to place more credence in evidence that is consistent with our prior beliefs and expectations. “If an attacker uses information the employee already knows or believes to be true, it can make the employee more inclined to trust the attacker.”

Authority biases.

“Employees are more inclined to follow the instructions of those in positions in authority, potentially without the legitimacy of these instructions being questioned.” By imitating authority figures’ faces or voices, attackers can harness this powerful bias and circumvent precautions that an employee might otherwise take.

CFOs face a three-fold risk:

To address all three, CFOs will need a multi-layered mix of solutions.

According to Eftsure’s 2023 survey data, only about half of finance leaders say they’re aligning with technology specialists on training and strategy.

Even if you’ve already implemented a cybercrime strategy, most organisations will benefit from tighter alignment with cybersecurity practices and training. This strategy should encompass people, processes and technology.

Fortifying control procedures against today’s scams is a good starting point for protecting against tomorrow’s scams, too.

After all, when distilled into their most basic elements, most AI-enabled scams are still fundamentally the same BEC attacks or social engineering scams we’ve seen for decades – it’s just that AI tools can increase their volume, scale and efficiency, while synthetic media can make old-fashioned fraud attempts even more convincing.

Control procedures like segregation of duties and call-back controls are some of the strongest defences against these scams. Standardising and automating parts of these processes helps ensure that staff don’t cut corners, intentionally or unintentionally

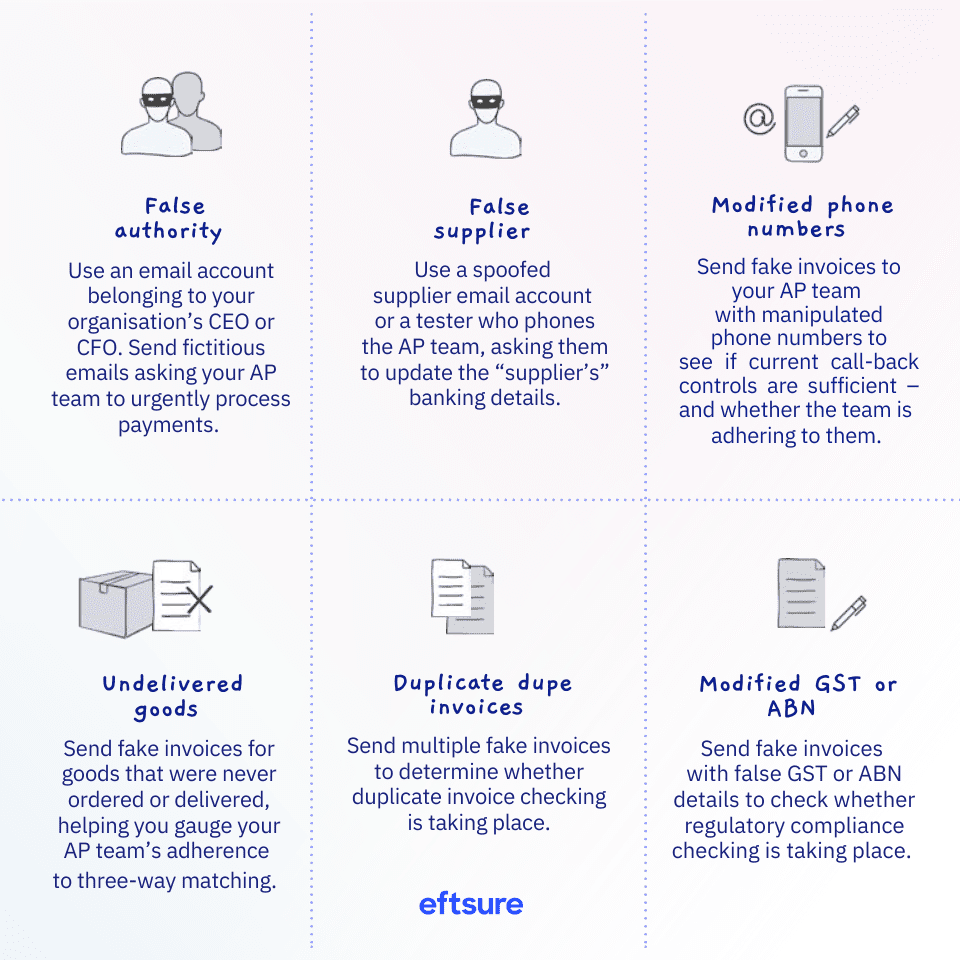

Threat actors tend to be more organised and aware of internal processes than some leaders realise. This is especially true now that AI tools can help them more quickly parse large datasets and communications after they’ve infiltrated a system. As a result, even if you already have existing controls in place, they need to be continuously pressure-tested to see how they stand up against evolving cyber threats.

Cybersecurity specialists use a practice known as penetration testing, in which security experts simulate cyberattacks on a system to identify vulnerabilities before malicious hackers can exploit them. Within a finance function, this looks like pressure- testing various controls to identify where there might be gaps in the process – this testing should happen while keeping in mind the potential for AI-honed messages or synthetic media.

Implement multi-factor authentication (MFA) on all accounts whenever possible, and ensure passwords are never duplicated or shared. Passwords should also be complex and software should be patched regularly.

This is another area that calls for a unified cybercrime strategy, since you’ll want to align with security professionals on training. Synthetic media risks and common fraud tactics should be learned alongside fundamental security hygiene, ensuring employees understand both the risks and how certain practices mitigate those risks.

Cybercriminals are already using technology to work smarter and faster – don’t cede the advantage. The right technology solutions can help you scale and automate processes without sacrificing efficiency.

Look for solutions that can act as guardrails for critical steps like payment processing, especially those that integrate with your existing tech stack and help make employee experiences easier or more seamless. No solution or procedure will be a panacea, so opt for technology that facilitates resilience and adaptability.

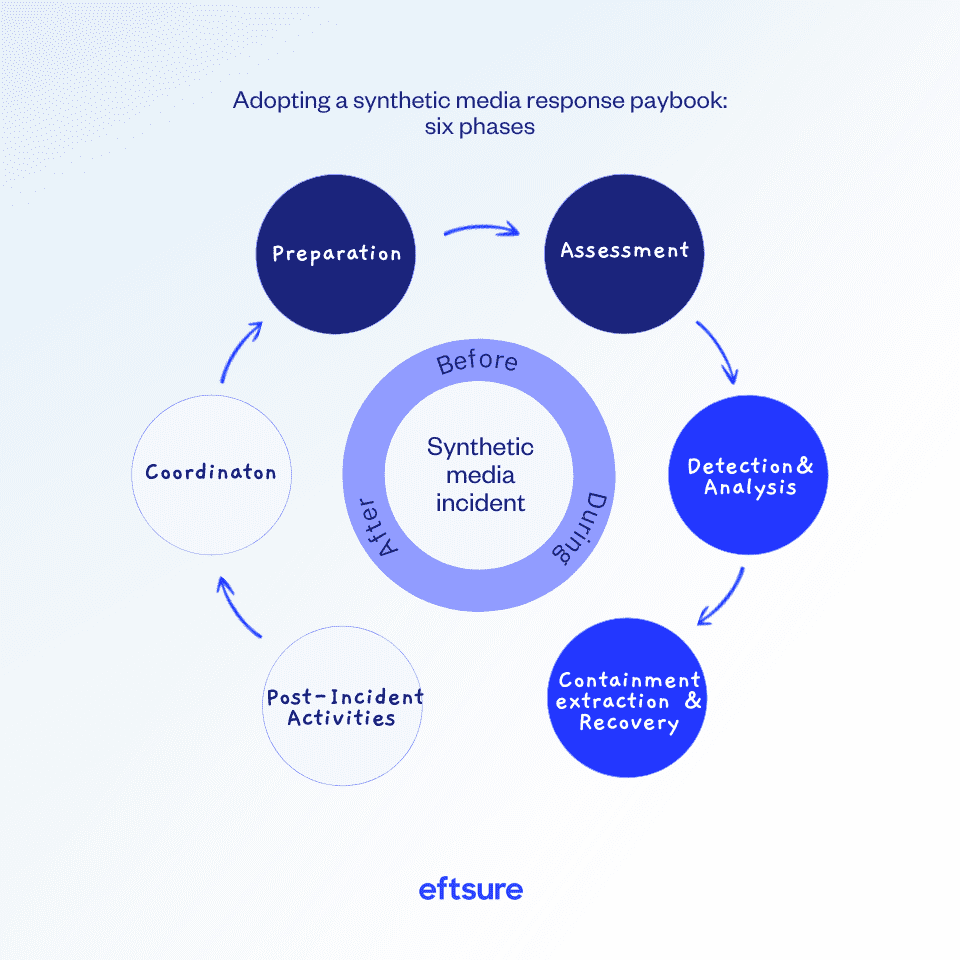

QUT researchers have urged leaders to adopt a synthetic media response playbook, which involves six phases.*

Many of these components overlap with a cybercrime strategy – particularly those around training and assessing threats – which is why it’s crucial to collaborate with leaders across the business to understand and protect against synthetic media risks.

“Firstly, promoting synthetic media incident awareness is essential so the entire organisation is aware of the risks which are presented and the potential infrastructure and psychological vulnerabilities which could

be taken advantage of,” says Whittaker.

“Training and awareness programs will be vital in establishing an organisational culture that understands the risks which synthetic media presents to their operations.”

“A centralised synthetic media response strategy should be the goal of any organisation planning a response,” explains Whittaker. “Enacting this strategy may manifest in different ways depending on various organisational departments. For example, finance could implement enhanced verification protocols such as biometric authentication or two-factor authentication when large or urgent financial transactions are required.”

As part of the preparation and assessment phases, the playbook also includes mapping out your organisation’s “crown jewels” and the assets most likely to attract threat actors, e.g. money.

“Many organisations already understand who has access to their crown jewels, so focusing on providing extra protections for the individuals and shoring up the authentication and approval processes associated with their roles could be a more effective investment of organisational resources,” explains Whittaker.

“It’s paramount to boost the protection of those most at risk of being targeted. That might be through the provision of more extensive educational modules, providing them with devices that have greater in-built security protocols, or requiring more extensive levels of authentication when they need to undertake important actions.” – Lucas Whittaker, QUT PhD candidate and deepfake researcher

We don’t currently know the full extent of AI’s threats (or potential benefits), but we do know that we’re entering a new era – one that will force us to reevaluate how we understand evidence and trust. We must learn to live and work in a world where AI is embedded into everyday life, which includes reassessing our security controls and strategies. Leaders can’t ignore this – technologists and even AI researchers have already issued warnings about its malicious use and have advocated for ethical practices.

However, even with better international regulation of generative AI, finance leaders will still need to adapt to a new reality. After all, cybercriminals are not as bound by laws or regulations and will always look for ways to capitalise on new technology. To stay one step ahead of them, CFOs need to think creatively, stay informed and implement

technology-driven processes.

End-to-end B2B payment protection software to mitigate the risk of payment error, fraud and cyber-crime.